The answer will depend largely on whether the materialists or the dualists are right. Is matter all there is, or is there something more? Are our brains just meat computers, or do we have a mind that is distinct from our brain?

Before we talk about consciousness, we should try to define it and ask what causes it, but that opens a can of worms.

Check out what Anil Seth had to say on the New Scientist website:

Consciousness is, for each of us, all there is: the world, the self, everything. But consciousness is also subjective and difficult to define. The closest we have to a consensus definition is that consciousness is “something it is like to be”. There is something it is like to be me or you – but presumably there is nothing it is like to be a table or an iPhone.

How do our conscious experiences arise? It’s a longstanding question, one that has perplexed scientists and philosophers for hundreds, if not thousands, of years. The orthodox scientific view today is that consciousness is a property of physical matter, an idea we might call physicalism or materialism. But this is by no means a universally held view, and even within physicalism there is little agreement about how consciousness emerges from, or otherwise relates to, physical stuff.

The emphasis on the word ‘subjective’ is mine. The word ‘subjective’ is a showstopper: it means there is no objective scientific test we can do on consciousness. Here is what MIT professor Rodney Brooks had to say about consciousness in his 2002 book Flesh and Machines: How Robots Will Change Us:

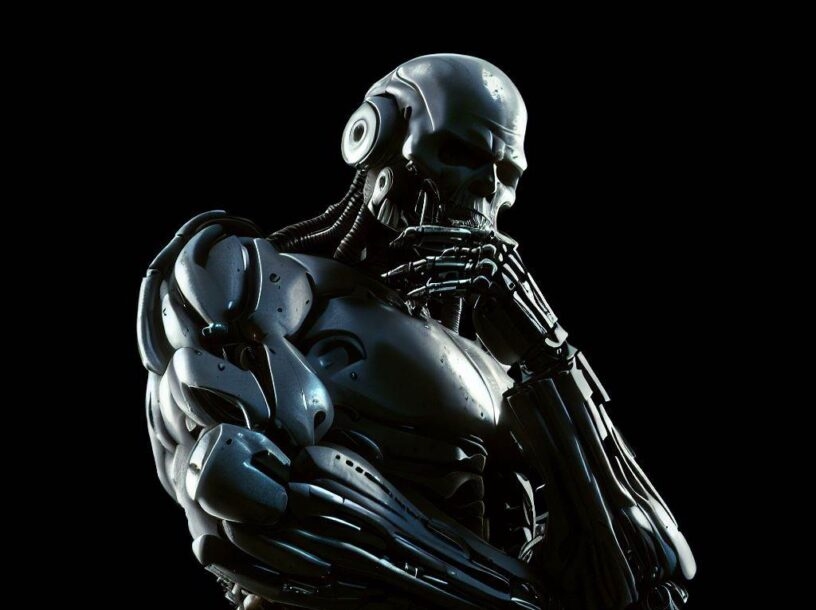

In my opinion we are completely prescientific at this point about what consciousness is. We do not know exactly what it would be about a robot that would convince us that it had consciousness, even simulated consciousness. Perhaps we will be surprised one day when one of our robots earnestly informs us that it is conscious, and just like I take your word for your being conscious, we will have to accept its word for it. There will be no other option.

So there we have our answer to the second part of the title’s question: we can’t know for sure, at least not in a way that is scientific and objective. What we conclude will largely come down to our worldview and opinions, and even then, 100% certainty isn’t guaranteed.

If our minds are not just a function of our brains, the problem gets even harder. How do you examine something non-material?

Dr Michael Egnor is a neurosurgeon who moved from a materialist worldview to become a dualist. In this video he cites various authorities and raises several points that he sees as calling the materialist view of the mind into question. He describes how experiments that probe the brain can induce arm movements and evoke memories, but not higher functions like ‘calculus’. While some materialists see ‘split brain’ patients as exhibiting two different personalities, Egnor insists that, outside of specialised experiments, these patients still have a single identity. He also discusses the kinds of seizures people can have: seizures can cause muscle spasms, but, he states, no one ever has a higher-function seizure that causes the patient to do ‘calculus’. He concludes that the materialist worldview can be a hindrance to understanding consciousness.

In the book The Spiritual Brain: A Neuroscientist’s Case for the Existence of the Soul, Mario Beauregard explains that there is no part of the brain that is never active when we are not conscious and that there is no part of the brain that is always active when we are. The implication is that consciousness is not localisable in the brain.

Explaining consciousness within a strict materialist worldview is problematic. Rodney Brooks more or less contradicts himself in this video: on the one hand he states that AI can be conscious, but when pressed he calls consciousness an illusion.

In my opinion, if you see consciousness as an artifact of the brain, and it is hard to explain (from a materialist’s perspective) why it came to be, you are forced to see consciousness as an illusion. The irony is that those who reach that conclusion do so while having the conscious thought that consciousness is an illusion. So what is a first responder supposed to do when determining whether a patient is conscious: call the philosophy department, or just behave as if the patient is not? Okay, I’m being facetious, but all the same, if something is too hard to explain within your worldview, especially the reason for it existing in the first place, then just sweep the problem under the rug, right?

So, given all that, how would you go about making a conscious robot? Well, if consciousness is an illusion, then it wouldn’t matter: simulating intelligence would be enough. If you find that satisfying, then good for you. If you think that consciousness is real, then we have a huge problem. When I write computer software, I need a specification and I need to understand it. Random keystrokes won’t do it, and guessing will often be misleading. What if we guess that complex things with some kind of energy are conscious, and assume that computers are already conscious? John Searle presented a thought experiment, which he called the Chinese Room, to show why he rejected that idea. It is described on the Stanford Encyclopedia of Philosophy website:

Imagine a native English speaker who knows no Chinese locked in a room full of boxes of Chinese symbols (a data base) together with a book of instructions for manipulating the symbols (the program). Imagine that people outside the room send in other Chinese symbols which, unknown to the person in the room, are questions in Chinese (the input). And imagine that by following the instructions in the program the man in the room is able to pass out Chinese symbols which are correct answers to the questions (the output). The program enables the person in the room to pass the Turing Test for understanding Chinese but he does not understand a word of Chinese.

Searle has his critics, but the Chinese Room is still a useful analogy: it helps people understand the processing that goes on inside computers and their lack of understanding when they process symbols.
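For readers who write software, here is a toy sketch of the room in Python. It assumes the simplest possible "book of instructions": a lookup table from incoming symbol strings to outgoing ones. The function name `chinese_room`, the `RULEBOOK` entries and the fallback reply are hypothetical illustrations, not anything from Searle; the rulebook in his thought experiment would be vastly more elaborate than a lookup table. The point is only that the code hands back sensible-looking Chinese answers while nothing in it refers to what the symbols mean.

```python
# A toy "Chinese Room": the program matches incoming symbol strings against a
# rulebook and passes back the listed reply. Nothing in it represents meaning.
# The rulebook entries below are hypothetical examples chosen for illustration.

RULEBOOK: dict[str, str] = {
    "你好吗？": "我很好，谢谢。",          # "How are you?" -> "I'm fine, thanks."
    "今天天气怎么样？": "今天天气很好。",  # "How is the weather today?" -> "The weather is nice today."
}

# Reply used when no rule matches: "Please say that again."
FALLBACK = "请再说一遍。"


def chinese_room(symbols_in: str) -> str:
    """Return the reply the rulebook lists for the incoming symbols.

    The lookup compares character sequences only; the function has no access
    to what the symbols mean, just as Searle's operator does not.
    """
    return RULEBOOK.get(symbols_in.strip(), FALLBACK)


if __name__ == "__main__":
    for question in ["你好吗？", "今天天气怎么样？", "你是谁？"]:
        print(question, "->", chinese_room(question))
```

The English glosses in the comments are there for the human reader; the program never consults them, which is exactly the position Searle's operator is in.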

I am a dualist, but even if I allow that consciousness may be situated entirely in our brains, I still don’t see how we could be 100% certain that AI will ever truly be conscious. Can a hope-for-the-best attitude work? Is it like finding a needle in a haystack? How big is the haystack? Is it like finding a needle in an infinite number of haystacks? For me it comes down to the subjective nature of consciousness. We can never be certain scientifically. But we can think about it, and we can be aware of ourselves thinking about it. I seriously doubt that computers will ever really think about their own thoughts in any human-like way.